As AI systems become increasingly sophisticated, not only does the line between human cognition and machine intelligence blur, but so do fundamental concepts such as intelligence, decision-making, truth and wisdom. The term AGI (Artificial General Intelligence), despite having recently entered the mainstream, remains nebulous; general intelligence is commonly defined as the point at which AI is as capable as a human. Initiatives such as ARC-AGI test not only skill itself but also skill-acquisition and generalisation. Its creator, François Chollet, explains in “On the Measure of Intelligence” that:

The intelligence of a system is a measure of its skill-acquisition efficiency over a scope of tasks, with respect to priors, experience and generalization difficulty.1

As these benchmarks become more specific, there may be a need to focus on concepts such as wisdom alongside them. The inclusion of priors and experience in this definition is central to what we perceive as wisdom: the ability to use lived experience to make informed decisions. However, the increasing capability of AI might challenge our understanding of wisdom as a uniquely human trait. In his essay “Situational Awareness”, Leopold Aschenbrenner uses the term intuition to describe AI’s potential to outstrip humans in understanding ML (machine learning):

They’ll be able to read every single ML paper ever written, have been able to deeply think about every single previous experiment ever run at the lab, learn in parallel from each of their copies, and rapidly accumulate the equivalent of millennia of experience. They’ll be able to develop far deeper intuitions about ML than any human.2

The word choice is particularly telling, since intuition is usually associated with human consciousness. AI is placed in both the subject and the object position; in other words, AI intuits aspects of machine learning with no humans in the equation. The same is apparent in the phrase “the equivalent of millennia of experience”, which invokes the framework of lived experience: one of the major arguments for dividing humans from AI, and often touted as the main component of consciousness. Can experience be so easily reduced to experiential data, or does this mistake the map for the territory? Experience is often seen as the interplay between the event itself and emotion, memory and context; even when it is recorded as data, it becomes abstracted through text, image and video. One could even argue that abstraction begins as soon as the event is registered as qualia through the senses, before it is stored in memory. If this is the case, would data suffice for AI to perceive or accumulate “wisdom”? Even if the quotation confines AI’s superior intuition to ML, which seems relatively plausible, what would this mean for alignment with human values? Later in the essay, Aschenbrenner states that we should:

[…] expect 100 million automated researchers each working at 100x human speed not long after we begin to be able to automate AI research. They’ll each be able to do a year’s worth of work in a few days. The increase in research effort—compared to a few hundred puny human researchers at a leading AI lab today, working at a puny 1x human speed—will be extraordinary. This could easily dramatically accelerate existing trends of algorithmic progress, compressing a decade of advances into a year. We need not postulate anything totally novel for automated AI research to intensely speed up AI progress.3

This at first appears innovative: AI could perpetually recreate the precipitating event of an intelligence explosion, improving itself in parallel with copies of itself and eliminating the need for individual training. Yet removing humans from the equation could lead to misalignment. AI development would become both faster and more opaque, undoing the alignment work and research leading up to this point. I argue that even if this automation were confined solely to AI research, the resulting takeoff would transform all areas of human existence beyond our reasoning and control.

My proposition, as it currently stands, is this: if such an automation boom were to occur, with AI systems rapidly automating not only aspects of their own development but also the documentation and evidence of that development, it would necessitate a new framework for human/AI interaction, connection and agency.

This essay introduces a novel conceptual structure, which I call the Hypermanifest: a cognitive space where humanity’s experiential wisdom and AI’s processing capabilities converge, forming a collaborative juncture at which both parties create new possibilities for decision-making and problem-solving. My aim for the Hypermanifest is to preserve human agency in an increasingly AI-driven world, a future in which humans remain integral to decision-making alongside the continuously increasing capabilities of AI.

The Hypermanifest Explained

I will first break the word down into its two sources: Wilfrid Sellars’ dichotomy between the Manifest and Scientific images, and Jean Baudrillard’s Hyperreal. Sellars characterises the manifest image as follows:

The 'manifest' image of man-in-the-world can be characterized in two ways, which are supplementary rather than alternative. It is, first, the framework in terms of which man came to be aware of himself as man-in-the-world. It is the framework in terms of which, to use an existentialist turn of phrase, man first encountered himself—which is, of course, when he came to be man.4

Sellars proposed two images: the manifest, humanity’s initial conceptual framework, arrived at through experiential human perception; and the scientific, which extends beyond immediate human experience in greater technical detail. Frontier models such as LLMs (Large Language Models) incorporate a blend of manifest information (art, language and social practices) and scientific information (explanations of what often lies beyond direct human experience). LLMs can then adopt characteristics of the manifest and develop their own interpretive models, creating a distinct reality in their dialogue that reflects the surface-level aspects of human interaction and experience as abstraction. This is akin to Baudrillard’s Hyperreal: a construct in which the distinction between the real and the simulated blurs, producing a realm of representation that becomes more “real” than the original it once represented. In Simulacra and Simulation, Baudrillard states that:

“abstraction is no longer that of the map, the double, the mirror or the concept. Simulation is no longer that of a territory, a referential being or a substance. It is the generation by models of a real without origin or reality: a hyperreal.” 5

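Bringing the two terms together, the Hypermanifest is therefore a conceptual interface, or latent space, in which humans and AI connect: it draws on the manifest image of human experience and on the hyperreal representations that AI models generate, and it exists outside the boundaries of the physical.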

Latent Space and State Space Semantics

The concept of latent space is central to understanding how AI models process and generate insights from vast datasets. In machine learning, latent space refers to a multi-dimensional space where data points are encoded in a more abstract, compressed form. This encoding allows the model to capture underlying patterns and features, enabling sophisticated data analysis and prediction. In the context of the Hypermanifest, this latent space can be envisaged as a dynamic environment in which humans and AI play to their respective strengths: humans provide context, creativity and ethical checks, while AI processes data accumulated over time to generate real-time insights.
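To make "compressed form" concrete, here is a minimal sketch in Python using principal component analysis (PCA), about the simplest way to learn a latent space: 3-D points that vary mainly along one hidden direction are each encoded as a single latent number and decoded back with little loss. The data and function names are hypothetical, and the models discussed in this essay learn far richer, non-linear latent spaces; this illustrates the idea of a latent encoding only, not how LLMs work.

```python
import math
import random


def mean(points):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def covariance(points):
    """Sample covariance matrix of the points."""
    mu = mean(points)
    d, n = len(mu), len(points)
    cov = [[0.0] * d for _ in range(d)]
    for p in points:
        c = [p[i] - mu[i] for i in range(d)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += c[i] * c[j] / (n - 1)
    return cov


def top_component(cov, iterations=200):
    """Power iteration: approximates the unit eigenvector with the largest eigenvalue."""
    vec = [1.0] * len(cov)
    for _ in range(iterations):
        nxt = [sum(row[j] * vec[j] for j in range(len(vec))) for row in cov]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    return vec


def encode(p, mu, axis):
    """Latent code: the point's signed coordinate along the principal axis."""
    return sum((p[i] - mu[i]) * axis[i] for i in range(len(p)))


def decode(z, mu, axis):
    """Map a latent code back into the original 3-D space."""
    return [mu[i] + z * axis[i] for i in range(len(mu))]


# Hypothetical data: 3-D points scattered closely around one hidden direction.
rng = random.Random(0)
hidden = [1 / 3, 2 / 3, 2 / 3]
points = []
for _ in range(200):
    t = rng.uniform(-5, 5)  # the hidden 1-D factor behind each point
    points.append([t * h + rng.gauss(0, 0.1) for h in hidden])

mu = mean(points)
axis = top_component(covariance(points))
codes = [encode(p, mu, axis) for p in points]  # three numbers compressed to one
recon = [decode(z, mu, axis) for z in codes]
avg_error = sum(math.dist(p, r) for p, r in zip(points, recon)) / len(points)
print(f"average reconstruction error: {avg_error:.3f}")
```

The reconstruction error stays close to the size of the added noise, which is the sense in which the one-number latent code has captured the "underlying pattern" of the data.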

State space semantics, on the other hand, provides a mathematical framework for representing all possible states a system can be in, along with the transitions between these states. Each state in this space corresponds to a unique configuration of variables defining the system at a given time. This framework is widely used in fields such as robotics, cognitive science, and AI to model and analyse the behaviour of complex systems.6
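A state space in this sense can be sketched in a few lines of Python. The system below is a hypothetical toy (a robot on a four-cell track with a lamp), chosen only because its state space is small enough to enumerate: each state is one configuration of two variables, a transition function lists the moves between states, and a breadth-first search collects every reachable state and transition.

```python
from collections import deque
from typing import NamedTuple


class State(NamedTuple):
    """One configuration of a hypothetical toy system: a robot on a 4-cell track with a lamp."""
    position: int   # cell index, 0..3
    lamp_on: bool   # whether the lamp is switched on


def successors(s):
    """Transition function: (action, next_state) pairs reachable from s in one step."""
    moves = []
    if s.position > 0:
        moves.append(("left", s._replace(position=s.position - 1)))
    if s.position < 3:
        moves.append(("right", s._replace(position=s.position + 1)))
    moves.append(("toggle", s._replace(lamp_on=not s.lamp_on)))
    return moves


def explore(start):
    """Breadth-first search: collect every reachable state and every transition between them."""
    seen = {start}
    transitions = []
    frontier = deque([start])
    while frontier:
        s = frontier.popleft()
        for action, nxt in successors(s):
            transitions.append((s, action, nxt))
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return seen, transitions


states, transitions = explore(State(position=0, lamp_on=False))
print(len(states), "states,", len(transitions), "transitions")  # 8 states, 20 transitions
```

Four positions times two lamp settings give the eight states; real systems in robotics or cognitive science have state spaces far too large to enumerate, which is why they are usually explored selectively rather than exhaustively.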

In state space semantics specifically, cognitive states can be represented as nodes or trajectories in a multidimensional space, with each dimension corresponding to a particular feature of the cognitive state. In the context of the Hypermanifest, this would allow human intuition to be mapped alongside the data-driven insights of AI, making it possible to track and visualise the convergence between AI’s processed information and human experiential wisdom.
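A minimal sketch of what tracking convergence could mean, assuming cognitive states are encoded as feature vectors. The three axes (confidence, novelty, evidence), the data and the distance threshold are all hypothetical, and Euclidean distance is only one of many possible choices.

```python
import math

# Hypothetical cognitive-state axes: each tuple below is (confidence, novelty, evidence).


def convergence_track(human_trajectory, ai_points, threshold):
    """For each step of a human trajectory, find the nearest AI point and flag convergence.

    Returns (step, nearest_ai_point, distance, converged) tuples using Euclidean distance.
    """
    track = []
    for step, h in enumerate(human_trajectory):
        nearest = min(ai_points, key=lambda p: math.dist(h, p))
        d = math.dist(h, nearest)
        track.append((step, nearest, d, d <= threshold))
    return track


# Hypothetical data: a human intuition drifting through the space, and two AI-derived insights.
human = [(0.2, 0.9, 0.1), (0.4, 0.8, 0.3), (0.6, 0.7, 0.5), (0.7, 0.6, 0.7)]
ai = [(0.7, 0.6, 0.8), (0.1, 0.2, 0.9)]

for step, nearest, d, converged in convergence_track(human, ai, threshold=0.15):
    print(step, nearest, round(d, 3), "converged" if converged else "")
```

Here the human trajectory moves steadily towards the first AI point and only the final step falls within the threshold, which is the kind of convergence event the Hypermanifest would aim to surface.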

Visualisation and Structure

Human intuition could be represented as vectors or trajectories in the state space, while AI computation could be visualised as manifolds. The points where they intersect or converge could then lead to new insights and breakthroughs through emergent pathways. In this way, the Hypermanifest would serve as an extension of the cognitive process, exemplifying the Active Externalism proposed by Andy Clark and David Chalmers. In their seminal paper, “The Extended Mind”, Clark and Chalmers describe Active Externalism in terms of a coupled system, in which a human and an external entity together form a cognitive system in its own right.7 All of the components in such a system play an active causal role and jointly govern behaviour. The Hypermanifest, in this context, would act as an external cognitive space where human intuition and AI’s computational abilities converge and interact. This interaction blurs the traditional boundaries between internal cognitive processes and external tools, creating a fluid and dynamic cognitive system.

Aschenbrenner also proposes something like a shared latent space, but without human involvement:

Vast numbers of automated AI researchers will be able to share context (perhaps even accessing each others’ latent space and so on), enabling much more efficient collaboration and coordination compared to human researchers.8

In these terms, Aschenbrenner’s prediction that AI would rapidly accumulate millennia of experience makes sense within the Hypermanifest: datasets of text and media provide a hyperreal version of events. However, if humans are left out of the loop altogether, this could signal the start of a “fast takeoff”, or FOOM, whatever its absolute speed: by its nature, the process would accelerate faster than humans could keep track of it, precisely because of how opaque it would be. Sacrificing control for speed carries considerable existential risk. The Hypermanifest could therefore be presented as this same shared latent space, just with humans invited to the party.

This could be implemented through a highly interactive application supporting haptic, voice, text and other multimodal communication, as well as Brain-Computer Interfaces (BCIs) to reduce the latency between human and AI. The human contributes the context of experiential data (the biology of emotion, specifically tuned memories and embodied narrative), while AI provides the surface detail of many lived experiences across time and circumstance. Together, these can create a new construct of wisdom, which I call Augmented Intuition Processes (AIPs).

Augmented Intuition Processes (AIP)

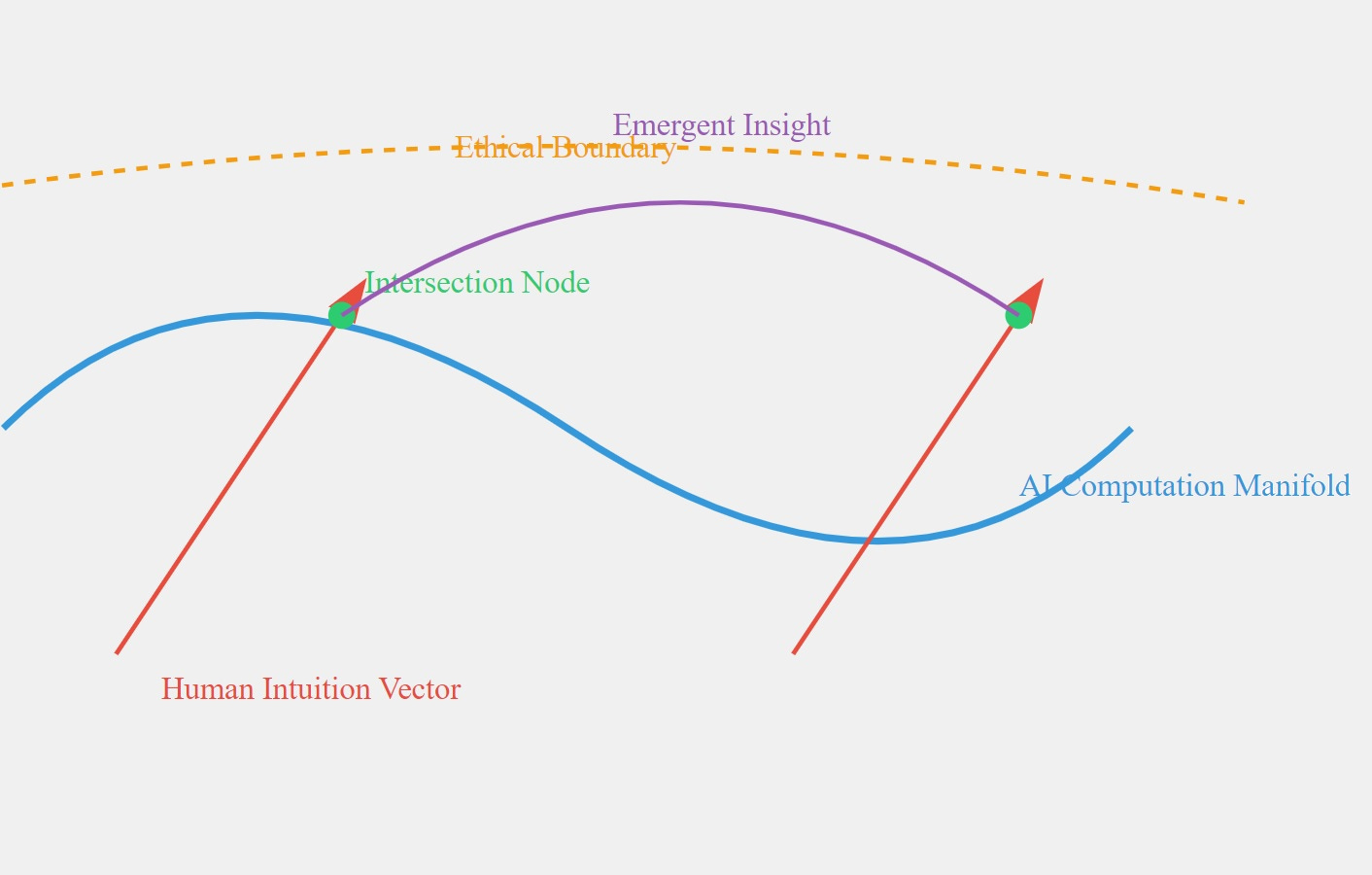

To understand the dynamics of the AIPs within the Hypermanifest better, I provide a visualisation of the interactions within a multidimensional state space:

Figure 1: An instance of an AIP in the Hypermanifest structure

AI Computation Manifold: This curve represents the landscape of AI processing capability, drawn here in two dimensions. In a higher-dimensional model it would appear as a complex multidimensional surface.

Human Intuition Vectors: These arrows represent trajectories of human thought and intuition, illustrating how human insights can influence and intersect with AI processing.

Intersection Nodes: The green dots mark the points at which human intuition vectors meet AI capabilities, the critical junctures that catalyse AIPs.

Ethical Boundary: The dashed yellow line represents the constraints that guide human intuition and AI processing, ensuring that interactions remain within moral limits.

Emergent Insight: The purple curve connecting multiple intersection nodes represents the solutions and novel ideas that emerge from the connection of human intuition and AI cognition.

The diagram highlights that AIPs are dynamic processes occurring at and between intersection nodes. An AIP begins when a human intuition vector approaches the AI computation manifold, at which point a process of mutual influence and information exchange takes place. This can propagate, creating further intersection nodes and leading to emergent insights.
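The geometry of Figure 1 can be sketched numerically, under loud assumptions: the manifold is an arbitrary hypothetical curve, a human intuition vector is treated as a straight segment, the ethical boundary is a horizontal line, and intersection nodes are found by scanning the segment for sign changes and refining each one with bisection. This is not a specification of the Hypermanifest; it only shows what "a vector meeting the manifold" could mean computationally.

```python
import math


def manifold(x):
    """Hypothetical AI computation manifold, drawn (as in Figure 1) as a 2-D curve y = f(x)."""
    return math.sin(x) + 0.3 * x


def intersection_nodes(start, direction, samples=200, tol=1e-9):
    """Points where the segment start + t * direction (0 <= t <= 1) crosses the manifold."""
    (x0, y0), (dx, dy) = start, direction

    def gap(t):
        # Height of the intuition vector above the manifold at parameter t.
        return (y0 + t * dy) - manifold(x0 + t * dx)

    roots = []
    ts = [i / samples for i in range(samples + 1)]
    for a, b in zip(ts, ts[1:]):
        ga, gb = gap(a), gap(b)
        if ga == 0:
            roots.append(a)
        elif ga * gb < 0:  # sign change, so the segment crosses the curve in (a, b)
            lo, hi = a, b
            while hi - lo > tol:  # bisection narrows the crossing down
                mid = (lo + hi) / 2
                if gap(lo) * gap(mid) <= 0:
                    hi = mid
                else:
                    lo = mid
            roots.append((lo + hi) / 2)
    return [(x0 + t * dx, y0 + t * dy) for t in roots]


def within_ethical_boundary(nodes, y_max):
    """Keep only nodes on the permitted side of a hypothetical boundary line y = y_max."""
    return [(x, y) for x, y in nodes if y <= y_max]


nodes = intersection_nodes(start=(0.0, 0.5), direction=(8.0, 2.0))
allowed = within_ethical_boundary(nodes, y_max=2.0)
print(len(nodes), "intersection nodes;", len(allowed), "within the boundary")
```

In this example the vector crosses the curve three times, and the boundary filter discards the one crossing that lies above the line; connecting the remaining nodes would give a crude analogue of the "emergent insight" curve.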

Examples of AIPs:

In environmental science, a research scientist has an intuition about a possible connection between cloud formation and ocean microplastics. The AI manifold analyses global climate data, atmospheric studies and ocean pollution data. When the vector and the manifold (intuition and analysis) connect, an AIP is triggered, exploring the mechanisms by which these two areas could be linked. An emergent insight then arises: the AIP generates a novel hypothesis about how microplastics might alter water vapour condensation, with the potential to affect global weather patterns in new ways. In this way, AIPs play to both parties’ strengths, allow for transparency in both directions and create a sense of accountability. This collaboration can lead to emergent ideas that transcend what either party could produce alone.

In creativity, an area currently under much scrutiny and controversy, there may be similar use cases. For example, a composer might try to create a new genre by combining features of two existing genres. Their intuition would meet an AI manifold consisting of vast databases of stylistic and mood compatibilities, as well as cultural music preferences. The intersection nodes could suggest different harmonies, rhythms and uses of instrumentation that the composer can then use in their work.

In education, AIPs could match a tutor’s intuition about a student’s needs with AI analysis of the student’s learning patterns, as well as global and local educational data. This could in turn yield unique insights into the way the student learns, and a personalised curriculum that adapts in real time to their progress.

Of course, this could be seen as sacrificing speed, since AIs sharing a latent space without a human in the loop would allow a much faster turnaround in processing new insights. However, the Hypermanifest structure could be used for far more than automating AI research. Human involvement also provides accountability should the need arise, and, as with all technologies, an initial acclimatisation phase must be accounted for. There may also be privacy concerns, especially with BCIs: the flow of information between humans and AI would need to be secure. To address this, I draw on Helen Nissenbaum’s concept of contextual integrity: the idea that privacy consists in the appropriate flow of information according to contextual norms, so that expectations about what information flows, and how, would be upheld by the Hypermanifest. She explains in “Privacy as Contextual Integrity” that “Contextual integrity ties adequate protection for privacy to norms of specific contexts, demanding that information gathering and dissemination be appropriate to that context and obey the governing norms of distribution within it”.9 The AI systems that make up the manifold could also adapt specific privacy techniques to individual users’ patterns.

We can now establish norms that would function within the structure of the Hypermanifest:

Purpose Limitation: Information is used solely for the intended purpose disclosed to the user, ensuring that all data is context-appropriate.

Data Minimisation: Only the data necessary for a specific function or interaction is collected, maintaining the flow of information while minimising privacy risks.

Transparency and Consent: Users are informed about data collection and usage and give explicit consent, maintaining trust.

User Autonomy and Control: Users have authority over their data, including rights of access, correction and deletion.

Context-specific Security Measures: Protections are tailored to the sensitivity of the data and its context.

Contextual Integrity Training: All parties understand the principles of contextual integrity and the specific norms of the Hypermanifest.

These norms, grounded in Helen Nissenbaum’s concept of contextual integrity, aim to preserve privacy and ethical standards within the dynamic environment of the Hypermanifest.
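Some of these norms can be made mechanically checkable. As a minimal sketch, assuming each information flow is tagged with its context, recipient, information type, purpose and consent status (a hypothetical schema, loosely modelled on contextual integrity's idea that flows are judged against the norms of their context), a checker for purpose limitation, data minimisation and consent might look like this. The procedural norms (user control, security measures, training) are not represented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A single information flow, described by hypothetical attributes."""
    context: str      # e.g. "education"
    recipient: str    # who receives the information
    info_type: str    # what kind of information flows
    purpose: str      # what the flow is used for
    consented: bool   # whether the user explicitly consented


@dataclass(frozen=True)
class ContextNorms:
    """An illustrative encoding of some Hypermanifest norms for one context."""
    allowed_purposes: frozenset    # purpose limitation
    necessary_info: frozenset      # data minimisation
    allowed_recipients: frozenset  # appropriate recipients in this context


def check_flow(flow, norms_by_context):
    """Return the norms a flow violates; an empty list means the flow is appropriate."""
    norms = norms_by_context.get(flow.context)
    if norms is None:
        return ["no norms defined for this context"]
    violations = []
    if flow.purpose not in norms.allowed_purposes:
        violations.append("purpose limitation")
    if flow.info_type not in norms.necessary_info:
        violations.append("data minimisation")
    if not flow.consented:
        violations.append("transparency and consent")
    if flow.recipient not in norms.allowed_recipients:
        violations.append("inappropriate recipient")
    return violations


# Hypothetical norms for the education example.
NORMS = {
    "education": ContextNorms(
        allowed_purposes=frozenset({"personalise_curriculum"}),
        necessary_info=frozenset({"learning_pattern", "quiz_scores"}),
        allowed_recipients=frozenset({"tutor", "student"}),
    )
}

ok = Flow("education", "tutor", "learning_pattern", "personalise_curriculum", True)
leak = Flow("education", "advertiser", "learning_pattern", "marketing", True)
print(check_flow(ok, NORMS))    # []
print(check_flow(leak, NORMS))  # ['purpose limitation', 'inappropriate recipient']
```

Returning the list of violated norms, rather than a single yes/no, keeps the check transparent: a user or auditor can see which norm a rejected flow breaks.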

Applying these norms to the earlier examples shows how this structure takes shape. In an educational context, for instance, only relevant data would be collected, and it would be transparent to, and controllable by, the student. In a medical context, priority would be given to strong encryption, limiting data use to treatment and providing clear diagnostic explanations.

These guardrails, together with human involvement in general, would also support transparency and build public trust, which is vital for the widespread adoption and use of AI technologies. They would also allow for course correction and problem-solving, which fully automated systems may struggle with, particularly for complex challenges. Finally, this approach prioritises quality control and the grounding of insights over raw speed, potentially leading to more sustainable and significant advances than a purely speed-focused approach.

Comparison of the Hypermanifest with Other Approaches

When proposing a framework such as this, it is important to consider how it relates to, and compares with, other approaches in the field.

One such approach is Human-in-the-Loop (HITL) AI, which involves human oversight, supervision and intervention in AI processes. Like the Hypermanifest, HITL aims to leverage both human expertise and AI capabilities in decision-making. Ge Wang, in “Humans in the Loop: The Design of Interactive AI Systems”, depicts full automation as the Big Red Button (BRB) and highlights the problems of removing human involvement: such systems offer little user control, and if the result needs improving, the input may have to be changed or the process restarted from scratch.10

Wang’s examples of HITL design include a tool that lets users control the level of jargon in a document, a slider that allows for gradients of choice, and a composition tool that adapts iteratively to the user’s input, with the human drawing annotations onto a waveform to train the underlying algorithm. HITL therefore positions humans externally, in an overseeing role, whereas the Hypermanifest involves a more deeply integrated partnership: a symbiotic and simultaneous interaction. The Hypermanifest is also designed to scale, evolving with advanced AI to produce specific emergent insights, which is not a typical goal of HITL systems. Despite these differences, both approaches aim to value and preserve human agency, and share the belief that interfaces should extend us.

Another approach is Inverse Reinforcement Learning (IRL) for AI alignment, in which a system infers human preferences and values from observed behaviour, recovering a reward function that aligns AI goals with human values. Although IRL shares the Hypermanifest’s goal of having AI systems act in accordance with human values, its specific focus on alignment differs from the Hypermanifest, which aims for a more comprehensive integration of human and AI cognition. Moreover, the Hypermanifest facilitates and encourages creative leaps at the intersection nodes that IRL’s behaviour-based inference might not capture.
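The inference step at the heart of IRL can be sketched in miniature. This is a simplified Bayesian variant under stated assumptions: choices are one-off (no sequential environment or policy), the human is modelled as Boltzmann-rational (choosing each option with probability proportional to exp(beta × reward)), and the reward is one of a few hypothetical linear candidates. Real IRL methods work over sequential decision processes, but the logic is the same: behaviour is the evidence and values are the hidden variable.

```python
import math

# Hypothetical options, each described by two features: (speed, safety).
OPTIONS = {"fast_risky": (1.0, 0.0), "balanced": (0.6, 0.6), "slow_safe": (0.1, 1.0)}

# Hypothetical candidate reward functions: linear weights over the two features.
CANDIDATES = {"values_speed": (1.0, 0.0), "values_both": (0.5, 0.5), "values_safety": (0.0, 1.0)}


def reward(weights, features):
    """Linear reward: weighted sum of an option's features."""
    return sum(w * f for w, f in zip(weights, features))


def choice_likelihood(weights, choice, beta=5.0):
    """P(choice | weights) under a Boltzmann-rational (softmax) model of the human."""
    scores = {o: beta * reward(weights, f) for o, f in OPTIONS.items()}
    z = sum(math.exp(s) for s in scores.values())
    return math.exp(scores[choice]) / z


def posterior(observed_choices, beta=5.0):
    """Bayesian update over candidate reward functions, starting from a uniform prior."""
    unnormalised = {}
    for name, weights in CANDIDATES.items():
        p = 1.0
        for c in observed_choices:
            p *= choice_likelihood(weights, c, beta)
        unnormalised[name] = p
    total = sum(unnormalised.values())
    return {name: p / total for name, p in unnormalised.items()}


post = posterior(["slow_safe", "slow_safe", "balanced"])
for name, p in sorted(post.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {p:.3f}")
```

The candidates are fixed in advance, so the inference can only redistribute belief among preferences it already contains; this is one concrete way of seeing the contrast with a dynamic, evolving model of human intuition.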

So while both IRL and HITL systems aim to align AI with human values, the Hypermanifest aims to create a new form of collective intelligence and wisdom. This holistic and dynamic framework differs both from adding human oversight to AI processes (HITL) and from aligning AI with a static model of human values (IRL). By treating human intuition as a dynamic component, one that actively evolves rather than remaining a fixed set of preferences, we can see the opportunities for AI and humanity in a lifelong collaboration that can be an effective force for the future.

Bibliography

Aschenbrenner, Leopold, “Situational Awareness” (2024) [https://situational-awareness.ai/from-agi-to-superintelligence/]

Baudrillard, Jean, “Simulacra and Simulation” (Ann Arbor: University of Michigan Press, 1994)

Chollet, François, “On the Measure of Intelligence”, arXiv preprint arXiv:1911.01547 (2019) [https://arxiv.org/abs/1911.01547]

Clark, Andy and David Chalmers, “The Extended Mind”, Analysis, Vol. 58, No. 1 (Jan., 1998), pp. 7-19

Nissenbaum, Helen, “Privacy as Contextual Integrity”, Symposium, 79 Wash. L. Rev. 119 (2004) [https://digitalcommons.law.uw.edu/wlr/vol79/iss1/10]

Russell, Stuart J. and Peter Norvig, “Artificial Intelligence: A Modern Approach”, 3rd ed. (Upper Saddle River, NJ: Prentice Hall, 2009)

Sellars, Wilfrid, “Philosophy and the Scientific Image of Man”, in “Science, Perception and Reality” (London: Routledge & Kegan Paul, 1963)

Wang, Ge, “Humans in the Loop: The Design of Interactive AI Systems” (2019) [https://hai.stanford.edu/news/humans-loop-design-interactive-ai-systems]

Notes

1. François Chollet, “On the Measure of Intelligence”, arXiv preprint arXiv:1911.01547 (2019) [https://arxiv.org/abs/1911.01547]

2. Leopold Aschenbrenner, “Situational Awareness” (2024) [https://situational-awareness.ai/from-agi-to-superintelligence]

3. Aschenbrenner, “Situational Awareness”

4. Wilfrid Sellars, “Philosophy and the Scientific Image of Man”, in “Science, Perception and Reality” (London: Routledge & Kegan Paul, 1963), p.6

5. Jean Baudrillard, “Simulacra and Simulation” (Ann Arbor: University of Michigan Press, 1994), p.1

6. Stuart J. Russell and Peter Norvig, “Artificial Intelligence: A Modern Approach”, 3rd ed. (Upper Saddle River, NJ: Prentice Hall, 2009), p.67

7. Paraphrased from Andy Clark and David Chalmers, “The Extended Mind”, Analysis, Vol. 58, No. 1 (Jan., 1998), pp. 7-19, p.8

8. Aschenbrenner, “Situational Awareness”

9. Helen Nissenbaum, “Privacy as Contextual Integrity”, Symposium, 79 Wash. L. Rev. 119 (2004), p.119 [https://digitalcommons.law.uw.edu/wlr/vol79/iss1/10]

10. Ge Wang, “Humans in the Loop: The Design of Interactive AI Systems” (2019) [https://hai.stanford.edu/news/humans-loop-design-interactive-ai-systems]